In the previous post, I explored a Parquet on S3 design with tombstones for constant time deletes and a CAS updated manifest for snapshot isolation. This post extends that design. The focus is in file delete operations where we replace a Parquet row group and publish a new footer using S3 Multipart Upload (MPU) and UploadPartCopy without having to download and rebuild unchanged bytes. We preserve the same system model with immutable data files and a manifest pointer that serializes visibility.

We’re not replacing tombstones—we’re defining when a targeted row‑group rewrite is warranted, under what constraints, and how it fits the tiny‑catalog (manifest) model.

Goals and constraints

What I am optimizing for

- Minimize data movement (no client egress for unchanged bytes)

- Preserve snapshot isolation (readers see a coherent version)

- Keep moving parts small (S3 + a tiny manifest/pointer)

- Make deletes predictable in latency (bounded by a handful of S3 requests)

What the system gives us and expects from us

- S3 Multipart Upload with

UploadPartCopyfor server-side range copies - Atomic assembly on

CompleteMultipartUpload(new object key) - Parquet footer is the source of truth for row groups and offsets

- 5 MiB minimum size for all non-last parts in MPU

- With versioning ON, older versions persist until explicitly deleted

- Readers doing range requests should send

If-Match: <etag>to avoid mixing versions

Recap: Parquet file + table setup

A Parquet file has a data section split into row groups and a footer that records schema, per‑group offsets/sizes/stats, optional indexes, then the 4‑byte footer length and the PAR1 tail.

Note: We use those absolute offsets later (rg_start, rg_end, footer_start).

Parquet file (compressed ~128–256 MiB)

┌──────────────────────────────────────────────────────────────────────┐

│ DATA SECTION │

│ ┌─────────────┬─────────────┬─────────────┬───────────────┐ │

│ │ Row Group 0 │ Row Group 1 │ Row Group 2 │ ... │ │

│ │ (columns → pages → stats per group) │ │

│ └─────────────┴─────────────┴─────────────┴───────────────┘ │

│ │

│ FOOTER │

│ - schema │

│ - row‑group directory (offsets, sizes, stats) │

│ - optional column/offset indexes │

│ - 4‑byte footer length + PAR1 tail │

└──────────────────────────────────────────────────────────────────────┘

Table shape and read pattern at a glance

A tiny mutable head _latest_manifest (updated with CAS - compare and swap) points to an immutable manifest/vN.json. That manifest lists the current set of write‑once Parquet data files. Readers first fetch the head to learn the version, then fetch that manifest and range‑read only the files it lists. This post (unlike the previous post) does not use tombstones; the manifest alone defines visibility.

Head (CAS) → Snapshot → Data files

┌ _latest_manifest ┐

│ {"version": N} │

└──────┬───────────┘

▼

manifest/vN.json

data_files:

- data/YYYY/MM/DD/HH/<uuid>.parquet

- ...

tombstones: [] # not used here

mytable/

├── _latest_manifest

├── manifest/v00000124.json

└── data/YYYY/MM/DD/HH/<uuid>.parquet

Lets begin: What we are building

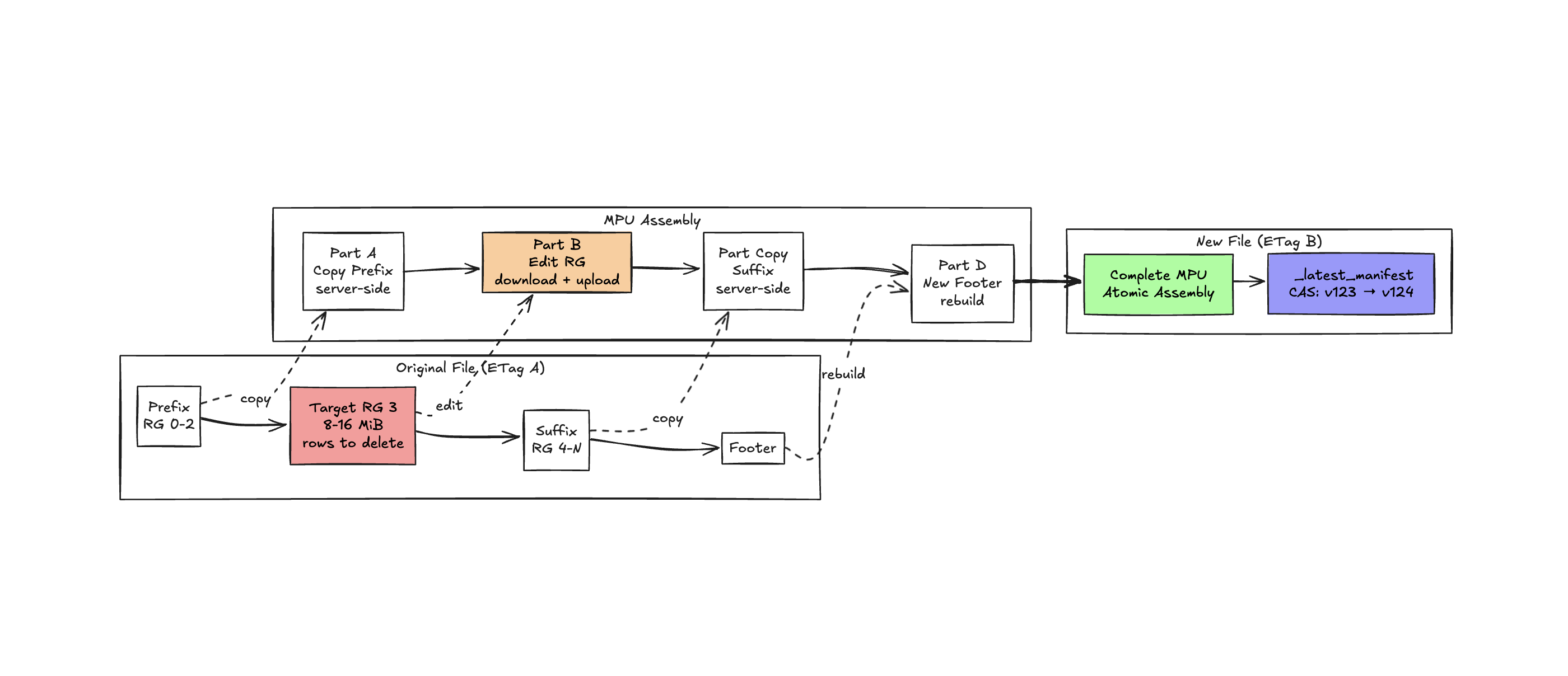

Say we need to delete only a subset of data in a Parquet file inside a row group for a customer request. The objective is to make that change visible atomically without rebuilding the entire object and without downloading unchanged bytes. We keep the system model from the previous post with immutable data files and a CAS updated manifest that serializes visibility. We assemble a new object with MPU (multi part upload) and UploadPartCopy. We download only the target row group and the footer then remove the rows and re encode that group and upload the edited bytes + Parquet footer. Unchanged prefix and suffix bytes are copied entirely within S3.

Layout and sizes

We focus on 128–256 MiB Parquet files with many row groups of say about 8-16 MiB each. This size keeps scan efficiency high and still lets a single edit localize to one row group.

mytable/

_latest_manifest # small, mutable CAS pointer (version + ETag)

data/

2025/10/12/09/f81d4fae.parquet

2025/10/12/10/a1b2c3d4.parquet

...

Head points to the current list of data files. Mutations rewrite only the file that contains affected rows and then update _latest_manifest last.

Mutable head + data files

┌ _latest_manifest (CAS) ┐

│ {"version": 123} │

└──────┬──────────┘

│ points to

▼

manifest/v00000123.json → {

data_files: [

"data/2025/10/12/09/f81d4fae.parquet",

"data/2025/10/12/10/a1b2c3d4.parquet",

...

],

tombstones: [...]

}

Rewrite only the object that contains the row group, then CAS head to a list with the new object key.

We pack data into 128–256 MiB Parquet objects with many row groups (≈8–16 MiB each). On deletion, we splice a new object via MPU by copying the prefix/suffix and uploading the edited row group and a new footer.

Implementation: Step-by-step replacement of row group

- Locate row → (object key, row_group_idx, row_idx)

- Range GET the footer and only the target row group

- Decode the downloaded row group then re encode it without the row then compute new stats

- Start MPU for destination object

UploadPartCopy(prefix up to row group)UploadPart(modified row group) # ≥ 5 MiB if not last partUploadPartCopy(suffix after row group)UploadPart(new footer) # last part can be small- Complete MPU (new object ready)

- Atomically swap

_latest_manifest(compare-and-swap on ETag) - Optionally delete old object/version

Readers fetch _latest_manifest once, then read only what it references. They never combine old and new.

Byte range view

bytes: 0 rg_start rg_end footer_start end

│──────────────────────────────────────│█████ edited ███│──────────────────│████ footer (new) ███│

│ PREFIX (copy) │ RG (upload) │ SUFFIX (copy) │ len + PAR1 │

How the object is assembled

We use offsets discovered from the Parquet footer:

rg_start: absolute byte offset of the first byte of the target row group (spans all columns for that group)rg_end: absolute byte offset immediately after the target row groupfooter_start: absolute byte offset of the footer region

The prefix (bytes=0…rg_start-1) and suffix (bytes=rg_end…footer_start-1) are copied with UploadPartCopy via CopySourceRange. The final part contains the regenerated Parquet footer bytes followed by the 4‑byte footer length and the trailing PAR1, and is uploaded last.

S3 MPU Assembly (new key)

┌───────────────────────────────────────────────────────────────────────┐

│ Existing Object │

│ [ PREFIX ][ ROW GROUP ][ SUFFIX ] │

│ bytes 0 … rg_start-1 rg_start…rg_end rg_end…footer_start │

└───────────────────────────────────────────────────────────────────────┘

Parts:

A: UploadPartCopy(PREFIX) ← server-side, no egress

B: UploadPart(edited RG) ← ≥ 5 MiB (non-last part rule)

C: UploadPartCopy(SUFFIX) ← server-side, no egress

D: UploadPart(new FOOTER+len+PAR1) ← last part can be tiny

CompleteMultipartUpload → new object assembled; visibility flips on manifest CAS.

Client and S3 sequence

Client S3

│ GET footer │

│────────────────────────▶│ discover ranges & stats

│ │

│ Create MPU │

│────────────────────────▶│

│ Part A: Copy PREFIX │

│────────────────────────▶│ (UploadPartCopy)

│ Part B: Upload RG │

│────────────────────────▶│ (UploadPart ≥ 5 MiB)

│ Part C: Copy SUFFIX │

│────────────────────────▶│ (UploadPartCopy)

│ Part D: Upload FOOTER │

│────────────────────────▶│ (UploadPart last)

│ Complete MPU │

│────────────────────────▶│ assemble new object (not visible yet)

│ │

│ Reader uses If-Match │

│◀────────────────────────│ range `GET`s pinned to `ETag`

This sequence captures the exact order of operations. You discover ranges from the footer, start a multipart upload (MPU), copy the prefix, upload the edited row group, copy the suffix, upload the footer as the last part, and then complete the upload. Readers pin to a single ETag so range requests stay consistent during a scan.

Re‑encoding and footer building

Re‑encoding a row group means decoding the pages for that group, removing the targeted rows, and writing new pages for each column using the same Parquet encodings. Page boundaries can change when rows are removed. That is expected and supported by the format. A new footer is then written that references the new absolute offsets, the new sizes, the updated statistics, and optional index sections for the edited group. Building a footer is not an unconventional trick. Parquet libraries in Java, C++, and Rust expose programmatic writers that let you construct a file from buffers and metadata. The careful part is computing the correct absolute offsets and keeping index offsets in sync if you use column and offset indexes.

Conceptual implementation

The conceptual implementation would then look something like this:

- Read Parquet footer and locate the target

row_group_idxentry. - Range GET the row group byte window

[rg_start, rg_end). - Decode pages for each column in the group; remove targeted rows; re‑encode pages using the same encodings/compression.

- Compute

new_rg_sizefor the edited group;delta = new_rg_size - (rg_end - rg_start). - Rebuild footer:

- Update total row count.

- Update per‑column stats for the edited group.

- Keep prior groups unchanged.

- Shift absolute offsets for all following groups by

delta. - Optionally rebuild column/offset indexes for the edited group; others are unchanged.

- Assemble the object with MPU: copy prefix

[0, rg_start), upload edited RG, copy suffix[rg_end, footer_start), upload new footer+len+PAR1 last.

Offset math

Let RGi.offset be the absolute byte offset of row group i in the file. Let k be the edited group.

old_rg_size = rg_end - rg_startdelta = new_rg_size - old_rg_size- For

i < k:RGi.offset' = RGi.offset(unchanged) - For

i = k:RGk.offset' = rg_start(size changes internally) - For

i > k:RGi.offset' = RGi.offset + delta footer_start' = footer_start + delta

Stats and optional index offsets for i > k shift by delta if they are stored as absolute offsets; if stored relative to group start they remain the same.

API specifics

Use CopySourceRange with the form bytes=first-last for prefix and suffix copies. For example bytes=0-1048575 copies the first 1 MiB. The source object must be larger than 5 MiB for a range copy and every non last part including copied parts must meet the 5 MiB minimum. Here MiB means mebibyte which is 1,048,576 bytes.

Why Parquet still works here

Parquet encodes columns inside row groups and stores a footer with offsets and statistics. When you rebuild one row group you change only the bytes for that group across all columns, you shift the absolute offsets for the groups that follow by the size delta, and you update the total row count and the per column statistics for the edited group. Everything else is copied as is with UploadPartCopy and range reads stay large and coalesced.

Caveats and gotchas

S3 and MPU

- Every non‑last part (including copied parts) must be ≥ 5 MiB (S3 requirement); widen the edited window or write a full new object otherwise.

- Copy ranges are inclusive (

bytes=first-last); an off‑by‑one will corrupt layout. - Assemble to a new key; do not mutate in place. Abort failed MPUs to avoid billed leftovers.

- Treat MPU ETags as version tokens; they are not MD5 hashes.

Parquet specifics

- You must rebuild the footer: update edited‑group stats, total rows, and shift absolute offsets after the edit.

- If the file uses column/offset indexes or bloom filters, regenerate for the edited group or omit them (still valid).

- Be careful with stats semantics (logical types, NaN ordering) to avoid wrong pruning.

Operational

- Use CAS on the manifest to flip visibility; readers never guess filenames.

- Garbage‑collect orphaned objects created by lost CAS races.

- Batch edits by row group and perform one rewrite per group to spread the cost.

Atomicity

The mutable _latest_manifest gives snapshot isolation. Readers see a coherent table version as of the head they fetched; new writes are invisible mid-scan. For immediate erasure in versioned buckets, delete prior versions or use per‑object encryption and drop old keys.

A mid‑file row‑group edit behaves the same as any rewrite: the new object is assembled atomically; readers pin scans with If-Match on the observed ETag.

View of the head swap

Before commit

_latest_manifest → v123

parquet object @ ETag A

After commit

_latest_manifest → v124

parquet object @ ETag B

Readers pin to a single ETag while scanning

When to choose what

- Use tombstones with a manifest when deletes are frequent and small and you can compact later. This gives very fast commits and minimal writes.

- Use

MPUrow group rewrite when you need immediate byte removal and the edited group is≥ 5 MiB. This avoids rewriting the whole file. - Use whole file rewrite when the file is small or widening the middle part is awkward. A single

PUTis straightforward.

When this fits and when it doesn’t

This pattern fits append‑heavy analytics on S3 where scans project a few columns, and you sometimes need immediate erasure of specific rows. It works well if you’re comfortable owning a small manifest and a simple GC job. It’s not a great fit for OLTP‑style point lookups without a PK locator, high‑churn per‑row updates, or heavy schema evolution and catalog features—in those cases, a full metadata layer like Iceberg or Delta is usually the better choice perhaps?

Closing

This keeps the parts small and sharp: immutable data objects, a tiny CAS head, and localized rewrites using MPU + UploadPartCopy. You get Parquet’s scan speed, S3’s range‑read economics, and immediate hard deletes without a coordinating service. Taken together with the previous post, I’d say these concepts get you close to a database built on modern cloud‑storage APIs for the right patterns while keeping operations simple (mostly) :D.