Recently I have been working with some queries in Postgres where I noticed either it has decided not to use an index and perform a sequential scan, or it decided to use an alternative index over a composite partial index. This was quite puzzling, especially when you know there are indexes in the system that can perform these queries faster.

So what gives?

After some research, I stumbled upon random_page_cost (ref).

What is Random Page Cost?

Imagine looking for a specific book in a library. Reading through books sequentially is like a sequential scan in a database, while jumping directly to the desired book is like a random access. The random_page_cost reflects the relative cost of random access compared to sequential access in the database. When RPC is high, Postgres thinks fetching data randomly is expensive, so it decides to read everything in a sequence (checking every book on the shelf) instead. This settings also impacts whether it decides to use a full index vs a partial or composite index. We will see more on this below.

Diving Deeper

The magic lies in how the PG uses this value. A higher RPC (random_page_cost) discourages the use of indexes, favoring sequential scans. In Aurora Postgres 14.11, currently the the default random_page_cost is 4.0. However, with modern storage technologies the cost of random access is much lower than with traditional spinning disks. By adjusting the RPC to reflect this reality, we can encourage the engine to leverage indexes more effectively, potentially leading to significantly faster queries.

Seeing is Believing: Before and After Examples

Let’s illustrate the impact of lowering RPC with a concrete but anonymized example. Here I am using EXPLAIN ANALYZE command to compare the query plan before and after adjusting the RPC on the same query. This LATERAL JOIN query aims to find a limited number of rows associated with a table called table_1, ordered by their IDs, while excluding entries where a completed_at has a value / is not null.

Now, there are two indexes present - one is the standard index called table_2_pkey which is an index on the primary key in table_2. The second is a composite partial index on table_2 where the index is on table_1_id, id and on the condition where (completed_at IS NULL).

Before (RPC = 4.0):

With the default RPC, the execution time of the query is ~11s. This clearly is very long and doesn’t scale for us. The explain looks like the following:

Nested Loop (cost=0.42..95.02 rows=1000 width=117) (actual time=0.115..11286.991 rows=1000 loops=1)

Buffers: shared hit=88534

-> Seq Scan on table_1 (cost=0.00..5.20 rows=2 width=8) (actual time=0.017..0.023 rows=2 loops=1)

Filter: (id = ANY ('{150,250}'::bigint[]))

Rows Removed by Filter: 14

Buffers: shared hit=5

-> Limit (cost=0.42..34.91 rows=500 width=117) (actual time=5149.715..5643.379 rows=500 loops=2)

Buffers: shared hit=88529

-> Index Scan using table_2_pkey on table_2 (cost=0.42..20413.13 rows=295949 width=117) (actual time=5149.713..5643.321 rows=500 loops=2)

Filter: ((completed_at IS NULL) AND (table_1_id = table_1.id))

Rows Removed by Filter: 146339

Buffers: shared hit=88529

Planning:

Buffers: shared hit=1

Planning Time: 0.204 ms

Execution Time: 11287.091 ms

Here, PG performs a sequential scan on the table_1 table, followed by an index scan on the table_2 using a full index. This is because PG is determining the composite index to be more expensive with the default RPC of 4.0 as mentioned above.

After (RPC = 1.1):

Now, by lowering the RPC to better reflect the capabilities of the storage system, PG chooses to use the composite index as it deems it less taxing.

Now PG is able to perform the same query in just less than <2ms (!!)

Nested Loop (cost=0.56..57.04 rows=1000 width=117) (actual time=0.063..1.401 rows=1000 loops=1)

Buffers: shared hit=344

-> Index Only Scan using table_1_pkey on table_1 (cost=0.14..1.67 rows=2 width=8) (actual time=0.013..0.017 rows=2 loops=1)

Index Cond: (id = ANY ('{150,250}'::bigint[]))

Heap Fetches: 2

Buffers: shared hit=3

-> Limit (cost=0.42..17.69 rows=500 width=117) (actual time=0.032..0.585 rows=500 loops=2)

Buffers: shared hit=341

-> Index Scan using idx_complete_table_2_on_table_1_id_id_where_completed_at_is_nul on table_2 (cost=0.42..10022.74 rows=290287 width=117) (actual time=0.030..0.531 rows=500 loops=2)

Index Cond: (table_1_id = table_1.id)

Buffers: shared hit=341

Planning:

Buffers: shared hit=127

Planning Time: 0.647 ms

Execution Time: 1.496 ms

You can read more about Aurora/RDS recommendation here: https://docs.aws.amazon.com/prescriptive-guidance/latest/tuning-postgresql-parameters/random-page-cost.html

Results

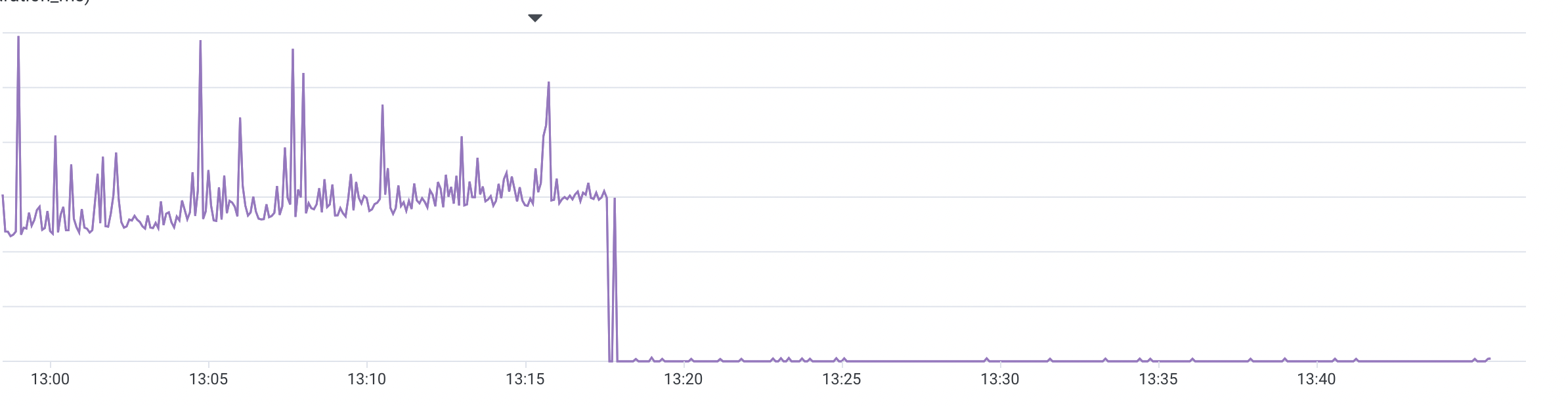

A good looking graph

Example

Here is a short example in a Rails app, how you can perform it on a per query/connection basis.

class ApplicationRecord < ActiveRecord::Base

# ...

def with_minimized_page_cost(&block)

ActiveRecord::Base.connection.exec_query("SET random_page_cost=1.1")

yield

ensure

ActiveRecord::Base.connection.exec_query("RESET random_page_cost;")

end

# ...

end

..

ApplicationRecord.with_minimized_page_cost do

# Perform your queries here

end

Some non solicited advise

Tune the RPC with caution. While it worked great in this case, its worth making sure that you should only do this for queries where you are certain that an index exists. For instance, we could actually make things worse here if the index was broken/invalid.

Wrapping it up

I’d be really curious if Aurora would decide to lower the default RPC to something between 1 to 2 here, since this is not the first time I have seen benefits by lowering the default RPC.